With the advent and growth of artificial intelligence (AI) in audit, the topic of trust comes up repeatedly in discussions. Auditors have always relied on their credibility to build and maintain relationships with clients. Auditors must build their own confidence in AI technologies before they can convince clients and regulators that these tools deliver the same level of assurance as traditional methods, if not more.

At MindBridge, we have been asking ourselves for some time: How can we build this confidence for our customers?

To support auditors in their assessment of AI as a viable option, we commissioned a third-party audit of the algorithms used in our risk discovery platform. This independent assessment by UCLC (University College London Consultants) is an industry first, providing a high level of transparency to any user of MindBridge technology and assurance that our AI algorithms operate as expected.

While the independent report is only available to customers, we’ll summarize the activities and results here.

Ethical AI and MindBridge

AI and machine learning (ML) are the most influential and transformative technologies of our time, leading to legitimate questions around the creation and application of these systems. Will AI-based algorithms influence potentially life-altering decisions? How are these systems secured? Are audit firms required to prove the credibility of their AI tools?

The ethics of AI receives continual press and social media coverage because the technology shapes how we interact with the world and defines how aspects of the world interact with us.

“AI ethics is a set of values, principles, and techniques that employ widely accepted standards of right and wrong to guide moral conduct in the development and use of AI technologies.”

- Understanding artificial intelligence ethics and safety, The Alan Turing Institute

The biggest companies, from Amazon to Google to Microsoft, recognize the ethical issues that arise from the collection, analysis, and use of massive sets of data. The ICAEW, in its “Understanding the impact of technology in audit and finance” paper, says that it is “crucial for the regulators to develop their capabilities to be in a position to effectively regulate these sectors in the face of advances in technology.”

MindBridge has long realized that transparency and explainability are critical for the safe and effective adoption of AI, with a demonstrated commitment to the ethical development of our technology.

Why third-party validation matters

“80% of respondents say auditors should use bigger samples and more sophisticated technologies for data gathering and analysis in their day-to-day work. Nearly half say auditors should perform a deeper analysis in the areas they already cover.”

– Audit 2025: The Future is Now, Forbes Insights/KPMG

The most significant difference between traditional audit approaches and an AI-based one is in how effectively auditors spend their time. An AI audit analytics platform can analyze 100% of a client’s financial data, so auditors can avoid large samples in low-risk areas and focus their time on areas of high judgement and audit risk.

Due to the increased effectiveness of AI-based tools, regulators, audit firms, and their clients now consider data analytics an essential part of the industry’s business operations. With such a widespread impact, no one should blindly trust technology that has the potential for misinterpretation or misuse.

Auditors are known for assessing risk and gaining reasonable assurance. AI is just another example where skilled, third-party technology validation must be performed.

How the audit of MindBridge algorithms was achieved

The third-party audit of MindBridge algorithms was performed by UCLC, a leading provider of academic consultancy services supported by the prestigious UCL (University College London). UCLC’s knowledge base draws from over 6,500 academic and research staff covering a broad range of disciplines, and includes clients from international organizations, multinational enterprises, and all levels of government. UCL’s reputation as a world leader in artificial intelligence meant they were the right partner for MindBridge in completing this audit.

The goal of the audit was to verify three things about the algorithms used by our automated risk discovery platform:

- That the algorithms work as designed

- What the algorithms do while operating

- That MindBridge processes are sufficient with regard to algorithm performance review, the implementation of new algorithms, and algorithm test coverage

For auditors and the accounting industry, the importance of this type of report cannot be overstated:

“Auditors have to document their approach to risk assessment in a way that meets the auditing standards. They are also required to clearly document conclusions they make over the populations of transactions considered through the course of audit. Where this analysis is being conducted through MindBridge, there is therefore a burden of proof to demonstrate that the software is acting within the parameters understood by the auditor.”

– 3rd Party Conformity Review (Algorithm Audit) for MindBridge, UCLC

The first step was for the UCLC auditors to identify potential risks in the MindBridge algorithms across four sets of criteria:

Robustness of the algorithm

Split into correctness and resilience, this set of criteria validates that the algorithms perform as expected, react well to change, and are well documented. Generally, the algorithms are rated on their ability to score risk correctly and to work reliably across a range of inputs and situations.

For example, many of the techniques employed by MindBridge audit analytics are used to identify unusual financial patterns, such as those required by the ISA 240 standard. One set of these techniques falls under “outlier detection,” a form of ML that doesn’t require pre-labelled training data and as such, reduces the potential to bring bias into the analysis.

The limitation of this unsupervised ML is that it has no deep or specific knowledge of accounting practices. MindBridge addresses this with an ensemble approach, augmenting the outlier detection with other techniques that bring domain expertise into the analysis. Called Expert Score, this lets the analysis identify the relative risk of unusual patterns by combining human expert understanding of business processes and monetary flows with the outlier detection.
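To make the ensemble idea concrete, here is a minimal sketch of blending an unsupervised outlier score with rule-based expert checks. It is an illustration only and not MindBridge's implementation: the `Entry` type, the three rules, the modified z-score method, and the equal weighting are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class Entry:
    """Hypothetical journal entry used only for this illustration."""
    amount: float
    posted: date
    memo: str = ""


def outlier_scores(amounts):
    """Unsupervised score in [0, 1] based on the modified z-score (median and MAD).

    No labelled training data is needed; each amount is compared with the rest.
    """
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        return [0.0 for _ in amounts]
    # 0.6745 scales the MAD to match a standard deviation for normal data;
    # 3.5 is a commonly used cutoff, so scores saturate at 1.0 beyond it.
    return [min(0.6745 * abs(a - med) / mad / 3.5, 1.0) for a in amounts]


def expert_score(entry):
    """Fraction of the (assumed) expert rules that the entry triggers."""
    rules = [
        entry.amount >= 1000 and entry.amount % 1000 == 0,  # large round amount
        entry.posted.weekday() >= 5,                         # posted on a weekend
        entry.memo.strip() == "",                            # missing description
    ]
    return sum(rules) / len(rules)


def ensemble_risk(entries, weight_outlier=0.5):
    """Blend the unsupervised and expert scores into one relative risk score."""
    unsupervised = outlier_scores([e.amount for e in entries])
    return [
        weight_outlier * u + (1 - weight_outlier) * expert_score(e)
        for u, e in zip(unsupervised, entries)
    ]


entries = [
    Entry(120.50, date(2024, 3, 4), "office supplies"),
    Entry(98.10, date(2024, 3, 5), "courier"),
    Entry(134.75, date(2024, 3, 6), "software"),
    Entry(110.00, date(2024, 3, 7), "travel"),
    Entry(50000.00, date(2024, 3, 9), ""),  # round, weekend, no memo
]
risks = ensemble_risk(entries)
for entry, risk in zip(entries, risks):
    print(f"{entry.amount:>10.2f}  risk={risk:.2f}")
```

In this sketch the last entry ranks highest because it is both a statistical outlier and a match for every expert rule, while the routine entries score low on both counts. A real system would likely use many more techniques and tuned weights.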

AI explainability

Split into documentation and interface components, this set of criteria validates that the algorithms and their purposes are easy for users to understand. This is critical for financial auditors in setting clear and meaningful expectations, and key to building confidence with their clients.

“When making use of third-party tools, audit trails are vital, and auditors should ensure that they are able to obtain from providers clear explanations of a tool’s function, including how it manipulates input data to generate insights, so that they are able to document an audit trail as robust as one that could be created with any internally developed tool.”

– Response to Technological Resources: Using technology to enhance audit quality, Financial Reporting Council

Privacy

These criteria apply to the effectiveness of controls relevant to the security, availability, and processing integrity of the system used to process client information. Privacy is closely linked to the prevention of harm, whether financial or reputational. AI systems must guarantee privacy and data protection throughout the lifecycle and be measured against the potential for malicious attacks.

Bias and discrimination

This applies to the effectiveness of controls in place to prevent unfairness in AI-based decision making. As MindBridge algorithms don’t use or impact data from identifiable individuals, this category presents limited risk.

Methodology

The assessment involved numerous tests and research to grade performance on a scale from “faulty” to “working as intended or passed the tests” for each set of criteria above. This included:

- Using different settings and data as input into the AI algorithms and recording the results

- Comparing the results of validation code against the results of the AI platform code

- Conducting interviews with the CTO and key data science, software development, and infrastructure personnel to determine processes and controls for systems development, operationalization, security, and testing

- Assessing the data science and software development expertise of the MindBridge team
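The second step in the list, comparing validation code against platform code, can be sketched as follows. This is a hypothetical illustration and not the procedure UCLC used: the function name, the dictionary-of-scores format, the entry identifiers, and the tolerances are all assumptions.

```python
import math


def compare_outputs(platform_scores, validation_scores, rel_tol=1e-9, abs_tol=1e-12):
    """Return entries that are missing on one side or differ beyond tolerance.

    Both arguments map an entry id to a numeric score. The result maps each
    mismatching id to a (platform_value, validation_value) pair, with None
    marking a missing value.
    """
    mismatches = {}
    for key in sorted(platform_scores.keys() | validation_scores.keys()):
        p = platform_scores.get(key)
        v = validation_scores.get(key)
        if p is None or v is None or not math.isclose(
            p, v, rel_tol=rel_tol, abs_tol=abs_tol
        ):
            mismatches[key] = (p, v)
    return mismatches


# Made-up scores from the platform and from an independent re-implementation.
platform = {"JE-001": 0.12, "JE-002": 0.87, "JE-003": 0.40}
validation = {"JE-001": 0.12, "JE-002": 0.78, "JE-004": 0.05}

report = compare_outputs(platform, validation)
for entry_id, (p, v) in report.items():
    print(entry_id, "platform:", p, "validation:", v)
```

An empty report would indicate that the two implementations agree on every entry within the chosen tolerance.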

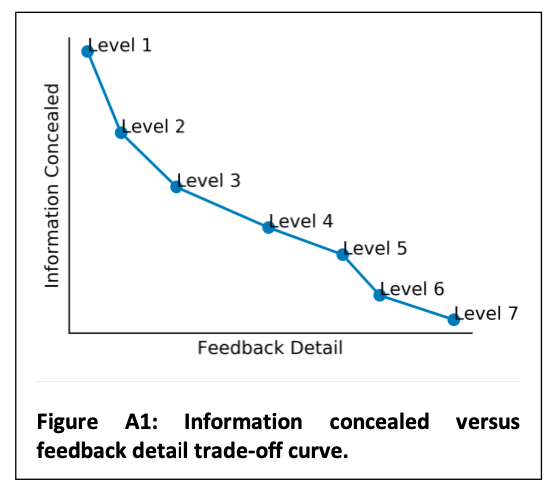

The UCLC auditors were granted level 7, or “glass-box,” access to the algorithms. This is the most transparent level of access available for an assessment, and it allowed the audit to cover all details of the algorithms.

All MindBridge algorithms passed the assessment and the auditor’s report is available upon request to customers, regulators, and others who must rely on our algorithms.

Conclusion

With the completion of the independent, third-party audit of its algorithms, MindBridge provides clear evidence that AI-based tools can support the financial audit process safely and effectively. Through this assessment, MindBridge further enables the audit of the future by helping firms build confidence with their clients in the value of making AI and audit analytics an essential part of business operations.

For auditors, this announcement makes it easier to place further reliance on the results of the MindBridge artificial intelligence, allowing auditors to sample fewer items and spend more time where it matters most. It’s a key stepping stone in building credibility for AI in audit, and we hope that such third-party algorithm audits become the standard across the sector.

To learn about MindBridge’s most recent verification journey with Holistic AI, visit our blog.

For more information on how AI and automated risk discovery support your firm, download this free eBook now:

Automated risk discovery: What is it, and how firms can achieve it